Building the Engine #1: Finding the Truth in a Sea of Bad Actors

newsiphy·@greer184·


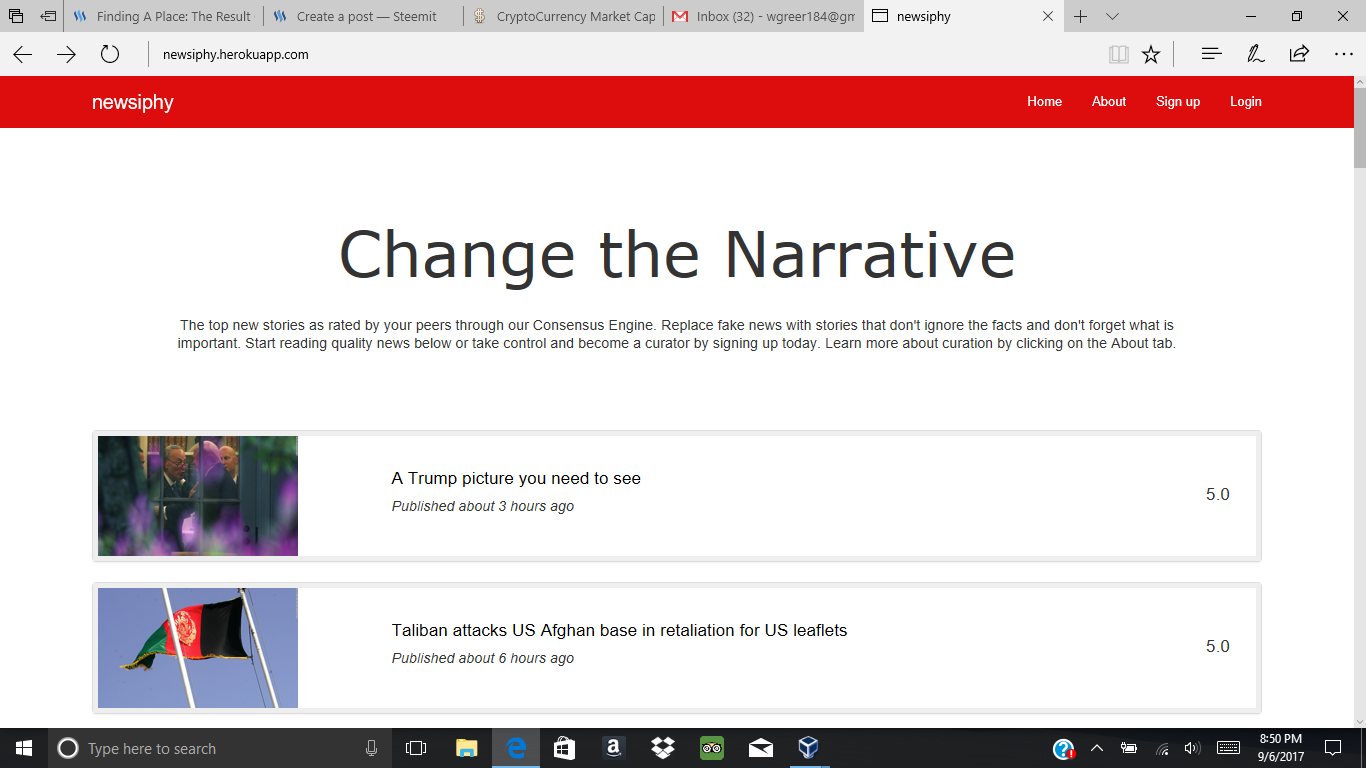

I have written zero posts about programming and the stuff I work on outside of Steemit. Today, I'm going to describe the project I have been working on, on and off in my free time, since May. The goal of the Newsiphy project was to find a way to address the fake news problem while giving users a sense that they still had control over the process. Here's how I attempted to solve that problem.

<center>  <h6><em>A screenshot of the home page of my website</em></h6> </center>

#### Why Fake News?

I wanted to tackle an actual issue that people were complaining about, and one where I thought the response would be incorrect. I have written several articles on political topics, covering why people tend to hold biased views and how fake news is propagated from those views. I have also addressed why traditional machine learning methods have trouble finding fake news: it is hard to tell what fake news is in the first place. Many people have complained about the algorithms YouTube uses to find and remove bad content, and we can only assume Facebook would find a way to mess up the issue too.

The problem with building models is that you need two sets of data: examples of fake news and examples of actual news. But the conception of fake news may be different for the Silicon Valley data scientist than for someone living in the rural Midwest. Both perspectives hold some valuable information, but the algorithm will only ever see one, because the data scientist is the one compiling and classifying the datasets.

Thus, I attempted to build a mechanism that would allow anyone to influence the news, rather than use an algorithm that might be susceptible to biases outside the control of the algorithm itself.
#### Some Inspiration

Using skills I had acquired during my years as a computer science undergrad, I quickly built a webpage that pulled articles from the RSS feeds of politics sections at news sources across the web. Next, I wanted a way to rate these articles and display the top ones on the front page.

You should be thinking right now that this sounds a lot like Reddit, and Reddit did serve as the initial inspiration for this project. I used to visit /r/politics prior to the 2016 election, and I saw it turn from a liberal-leaning moderate site into a progressive propaganda page over the course of the election. Interesting political thought and speculation turned into Trump hate, and its links led to garbage news with an extra heaping of misleading narratives. I had come for political news and received political garbage.

So I needed to find a way to solve the problems Reddit created. First, new articles are automatically pulled from sites rather than brought in by the users. I wanted the focus to be on news articles rather than all types of media, and users tend to bring their biases with them, so I took that component away from the user. Second, I needed to build a system better than the simple upvoting system Reddit uses, in order to protect what I consider the minority opinion: the opinion that would be downvoted out of existence on Reddit.

#### The Consensus Engine Or The Neural Network With People Involved

My solution to protecting minority opinions was to build a system that valued consensus, or agreement across all individuals. Thus, I built a network of users to achieve this goal.

First, I used a 0-10 rating system. This gives a little nuance to people who want a little nuance (like me).

Second, I used a modified average with a penalty for disagreement. Specifically, I used a modified version of the variance to impose a penalty on the articles that draw the most disagreement.
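
To make the disagreement penalty concrete, here is a minimal sketch in Python. It is not the actual Newsiphy code: the function name `consensus_score`, the `penalty` parameter, its default of 0.25, and the use of a plain mean and plain population variance are all assumptions made for illustration, since the site uses modified versions of both.

```python
from statistics import fmean, pvariance


def consensus_score(ratings, penalty=0.25):
    """Average 0-10 rating, reduced in proportion to how much raters disagree.

    Hypothetical sketch: `penalty` controls how strongly the variance
    pulls the score down. The real site uses its own modified formula.
    """
    if not ratings:
        raise ValueError("need at least one rating")
    mean = fmean(ratings)
    spread = pvariance(ratings, mu=mean)  # population variance of the votes
    # Clamp at zero so heavy disagreement cannot push a score negative.
    return max(0.0, mean - penalty * spread)


# Two articles with the same average rating of 7.
agreed = consensus_score([7, 7, 7, 7])    # everyone agrees
split = consensus_score([10, 10, 4, 4])   # raters are divided
print(agreed, split)
```

Both articles average 7, but the one with divided raters ends up with the lower score, which is the behavior the penalty is meant to produce.
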
Thus, the highest-rated articles are the ones that a very large majority rated highly, trickling down from there.

Third, not everyone is as good at finding good content as other people. Thus, we adjust the rating of each user according to how accurate and how consistent they are. Accuracy is how close their rating comes to the final rating, and consistency is how close they stay to their typical behavior (in this case, their typical difference from the final rating). Accurate and consistent users are given a higher proportion of the "reward" of each article. This "reward" means that their opinion counts for more in the calculation of future ratings. Their opinion is weighted more heavily than that of an internet troll, for example.

Fourth, the system constantly updates and normalizes the weights given to each user as articles give out their rewards. Thus, people who participate in the system see their weight increase, while those who do not participate see their weight decrease. This weight is known as the voting power, because it gives a user's opinion more weight than the opinions of people with lower voting power.

Fifth, to prevent people from waiting until the dust has settled and then using the current score as a reference to maximize their voting power, the "reward" is scaled based on how soon a vote comes in. Early raters receive a bonus.

Sixth, there is an additional incentive to accumulate voting power. Users can participate in occasional voting periods where they decide on proposed changes to the website. If there were lots of support for adding a particular news feed, that feed might be put up for a vote.

Given the above, those who participate the most in the site get the most input over the site's potential changes.

Why do I consider this a neural network with people involved? Well, each user serves as a potential neuron in a one-hidden-layer network with a single input. The input is the news article.
The output is the final rating. The hidden layer is composed of users, each with a unique non-linear activation function (kind of: you have eleven different outputs instead of two, but you get the point). The Consensus Engine updates the weights based on the accuracy of each user's predicted result (there is no backpropagation, but updating weights based on a difference is a similar idea).

Why is this idea better than a neural network when it comes to fake news? We don't need to train the network on past examples. The network has already been trained by society. Sure, the weights might be off, but we remove the need for a dataset because the dataset is human experience, which I will argue is less fragile than a dataset artificially composed by a select few individuals.

#### Conclusion

Is this a bad idea? I don't really know. I would love to get some feedback or ruthless criticism (I get enough "good jobs" from friends and family). I think that even if the whole site concept isn't the most useful (since I designed the site for a personal want), some elements could be used in future projects and ideas. I just felt like sharing.

#### Sources:

[Visit the Webpage](https://newsiphy.herokuapp.com/)

Image: My Computer