Steem Pressure #8: Power Up Your dApp. RethinkDB? KISS my ASAP.

hive-160391·@gtg·

0.000 HBDSteem Pressure #8: Power Up Your dApp. RethinkDB? KISS my ASAP.

While building your Steem blockchain dApp, you will soon realize that it’s not that easy to get the data you are looking for quickly, unless there’s already an API that gives you what you need.

<center>https://www.youtube.com/watch?v=7IkI6yVvzHI</center>

We have several reliable, general purpose, “full” API nodes, provided by Steemit Inc. and community members:

| Steem API endpoint | owner |

|---|---|

|https://api.steemit.com| Steemit Inc. |

|https://anyx.io| @anyx |

|https://api.steem.house| @gtg |

|https://steemd.minnowsupportproject.org| @followbtcnews |

|https://steemd.privex.io| @privex |

You can use them for development or even (if you are brave enough) for production.

If your dApp is small and simple, it might work. And yes, in many cases it will.

But is it optimal? No.

In previous episodes, I described different types of nodes and their names and how this can be misleading when it comes to the so-called “full” nodes.

TL;DR

A full node means here something different than in the Bitcoin realm, where Bitcoin full node is pretty much something that a Steem consensus node can do.

Here, on Steem, the word “full” doesn’t refer to anything related to the blockchain - it refers to the fully featured set of APIs enabled within steemd.

Do you really need a full set of API calls?

In most cases you don’t. To get an idea about the information you can get from common Steem APIs, take a look at the devportal:

https://developers.steem.io/apidefinitions/

<sup>By the way, please consider [voting](https://steemconnect.com/sign/update-proposal-votes?proposal_ids=[63]&approve=true) for [Steem.DAO @inertia’s proposal](/@inertia/proposal-dev-portal-documentation-q1-2020), who already greatly contributed to the Dev Portal Documentation and is eager to do more.</sup>

For example, if you need the information returned by `get_market_history`, take a look at `condenser_api.get_market_history` and at its underlying api, in which case you will find:

> Also see: `market_history_api.get_market_history`

Which means that the API node you need should be running a `market_history` plugin.

Another example is a situation in which you want to find who “Resteemed” (a.k.a. “Reblogged”) a given post.

Here, you are lucky, because there’s a method for that called `get_reblogged_by` and provided by the plugin `follow`.

Maybe you want to get tags used by a specific author? That’s easy too, there’s the `get_tags_used_by_author` method provided by - yes, you guessed correctly - `tags` plugin.

There’s a pretty big chance that if your app needs to use `get_market_history`, it doesn’t need `tags` or `follow` plugins, because it’s not blogging oriented, and vice-versa - if you deal with `tags` and `follow`, you probably don’t care about the internal market.

Such knowledge lets you optimize the API infrastructure needed for your dApp.

For instance, you can run a “fat node” without `market_history`, `account_history`(see [Steem Pressure #6](/@gtg/steem-pressure-6-mira-ymmv-rtfm-tldr-lgtm)) and use [hivemind](https://github.com/steemit/hivemind) to get the `tags` and `follow` features.

That’s not the end yet. You get a lot more data than you’ve asked for, but still that may not be what you need.

Do you know why? If not, then please, read the first paragraph again.

How many blocks has the witness `gtg` missed so far?

That’s easy.

Run:

```

curl -s --data '{"jsonrpc":"2.0", "method":"database_api.find_witnesses", "params": {"owners":["gtg"]}, "id":1}' https://api.steem.house

```

You will instantly get your answer, because the `find_witnesses` method returns this information… among many pieces of information that you don’t need.

Now, try something more complicated:

### Problem to solve

How many blocks did @gtg produce until block 40000000?

And how many blocks did @gtg produce in August 2017?

And how many blocks did he produce that contained more than 50 transactions?

And what percentage of blocks produced in 2018 had more non-virtual operations than transactions?

How many blocks had only one transaction and which of them had the highest number of non-virtual operations? What is the id of the transaction that contains them?

You should be able to answer all these questions, all the data are in the blockchain after all. The problem is that steemd has no suitable API to answer these questions quickly.

This means that you either have access to a third party service / API that can answer these questions (see @arcange’s [SteemSQL](https://steemsql.com) “SQL server database with all Steemit blockchain data”, which is available in subscription model), or look into blocks to find your answer.

In the latter case, you will have to inspect all the blocks ever produced using the `get_block` method.

The good news is that every consensus node can run the `block_api` plugin.

The Steem blockchain has recently passed the 40 million blocks mark.

This means that in order to answer the first question, you will need to send more than 40 million requests to the API endpoint.

Each time this type of question is asked you will need to repeat the procedure, unless you…

### Build and run your own custom Steem API

<center>

</center>

<sub>TL;DR in Memeglish so that project managers can get it.</sub>

No. I’m not going to build an API in this post.

It is supposed to be **your own** API, solving **your** problems, optimized for **your** use case.

Instead, I’m going to play with some blockchain data to give you some tips.

To illustrate this, let’s analyze the problem described above. .

Let’s ignore incoming blocks and keep our focus in the range up to 40000000 blocks (catching up with the head block and dealing with micro-forks is a good topic for another episode; in the meantime, you can take a look at how hivemind does this).

Let’s assume that you can’t or don’t want to extend steemd with your custom `fancy_plugin` or a `fancy_plugin_api` that does the job.

# Keep It Simple Stupid

We don’t need information about virtual operations, so instead of using `get_ops_in_block`, which requires `account_history` plugin and API, we can use `get_block`, which saves a lot of resources, if you want to run your own node.

#### How does a request look?

```

curl -s --data '{"jsonrpc":"2.0", "method":"block_api.get_block", "params":{"block_num":197696}, "id":1}'

```

#### How does a response look?

```

{

"jsonrpc": "2.0",

"result": {

"block": {

"previous": "0003043f5746b4d9d06932467ad852ac5d71231c",

"timestamp": "2016-03-31T13:56:18",

"witness": "sminer22",

"transaction_merkle_root": "63321e3f001bed17d301399c5eeaa9b37a0bf74b",

"extensions": [],

"witness_signature": "1f64e94d51baef0b84be04644fafc0b05959e80db64de7b099d00499587f23f97216acd3d8df3c3b81f672b24f8cf47167720d205693d3f5713a65bcf004cffef3",

"transactions": [

{

"ref_block_num": 1087,

"ref_block_prefix": 3652470359,

"expiration": "2016-03-31T13:56:45",

"operations": [

{

"type": "vote_operation",

"value": {

"voter": "proskynneo",

"author": "proskynneo",

"permlink": "steemit-firstpost-1",

"weight": 10000

}

}

],

"extensions": [],

"signatures": [

"202765ea6b688c2ce968ff2b3f1f6715fddd67374f409a202ae720ca072cb6a919175c27921edfc24c5e3d898a221e88395af31a155b1d60b2bd5475d30a30e226"

]

}

],

"block_id": "00030440d0fbfc8323a1388cd009f2a7f9f43162",

"signing_key": "STM6tC4qRjUPKmkqkug5DvSgkeND5DHhnfr3XTgpp4b4nejMEwn9k",

"transaction_ids": [

"679bcc6414b2f2bd16818a83f020bb9a478a84c4"

]

}

},

"id": 1

}

```

<sup>Well, when pretty printed for your convenience, API normally returns a compact version, which is just one line without extra whitespaces.</sup>

#### Caveat

As you can see, the resulting data don’t contain `block_number`, but you can use the `id` that equals the block number you are asking for.

We need to get 40M blocks.

That’s a lot, but fortunately you need to do this only once.

Irreversible blocks are not going to change because… well, I hope you can guess why ;-)

Even if you use [jussi’s batch requests](https://developers.steem.io/services/#json-rpc-batch), throwing that many requests at public API nodes will quickly trigger rate limiting, and even if it doesn’t, it will take a significant amount of time.

#### Your own consensus node for simple tasks

You can use your own consensus Steem node for that. Local means faster.

Think about latency and how any latency (`1ms`? `2ms`?) is no longer negligible if you multiply it by 40000000.

Such a node is not resource hungry, and with MIRA you can easily run it on a 8GB RAM machine with 500GB storage.

A consensus node currently needs `245GB` for `block_log` and `56GB` for the state file.

Besides, your dApp will benefit from such a node also for broadcasting purposes.

Configuration is as simple as that:

<center>https://cdn.steemitimages.com/DQmfJxAnAk2jvJMEoo3U3ivLDhie3vPvXxmSU8RfFLMrYcQ/config-ini.gif</center>

#### Use someone else’s hard work

There are already people (like me) who [serve the `block_log`](https://gtg.steem.house/get/blockchain/) to speed up replay times.

If only there were a place where one could bulk download JSON files with Steem blocks...

Well, if there’s a need for it, I can make it available with 1M block packages every time we reach 5 or 10 million.

It will save a lot of time for all microservices that require block processing (the last mile can be synced using traditional methods)

#### Bulk

You’ve already seen how a single block looks in JSON format.

Obviously, we are using compact output to save space.

The whole data set (40M blocks in the uncompressed JSON format) takes 580GB.

For our needs, we need only a small subset of such data.

To get what’s needed we can use `jq`:

```

jq -c '

{

id: .id,

block_id: .result.block.block_id,

timestamp: .result.block.timestamp,

witness: .result.block.witness,

txs: .result.block.transactions | length,

ops: [.result.block.transactions[].operations[]] | length,

txids: .result.block.transaction_ids

}'

```

After processing our blocks we get 46GB of uncompressed json data.

#### Everything Should Be Made as Simple as Possible, But Not Simpler

A flat file? Are you kidding me?

Use your favorite database to store it.

(Unless your favorite database is MongoDB, then use something else.)

#### Or maybe RethinkDB?

Some time ago @someguy123 told me about some fancy database designed to store JSON.

> _”RethinkDB is the first open-source, scalable JSON database built from the ground up for the realtime web. It inverts the traditional database architecture by exposing an exciting new access model - instead of polling for changes, the developer can tell RethinkDB to continuously push updated query results to applications in realtime. RethinkDB’s realtime push architecture dramatically reduces the time and effort necessary to build scalable realtime apps.”_

So this not only means effective and effortless storage of JSON data, but also sounds like a perfect choice for many Steem dApps.

But is it? Maybe. For some needs. It’s not perfect for sure, but no solution is.

I’m going to give it a try.

Do your own research. Maybe it will fit your needs. If not, look for something else.

Let’s see how easy it is to store data we need in RethinkDB

##### Step 1: Install RethinkDB

Check the RethinkDB webpage for [installation instructions](https://rethinkdb.com/docs/install/) suitable for your server.

For Debian or Ubuntu, it’s enough to do the usual:

###### Add RethinkDB repository

```

echo "deb https://download.rethinkdb.com/apt `lsb_release -cs` main" | sudo tee /etc/apt/sources.list.d/rethinkdb.list

wget -qO- https://download.rethinkdb.com/apt/pubkey.gpg | sudo apt-key add -

sudo apt-get update

```

###### Install RethinkDB package

```

sudo apt-get install rethinkdb

```

That’s all. No questions asked.

###### Install the RethinkDB python driver

Because the `rethinkdb import` subcommand has - surprisingly - an external dependency on the python driver, depending on the way you installed RethinkDB, you might need to get the RethinkDB python driver too.

For that, you need to have `pip`, and, ideally, `virtualenv`, so if you don’t then:

```

sudo apt-get install python3-pip python3-venv

```

and once you have them, install the RethinkDB python driver into your virtual environment:

```

python3 -m venv ./rethink

source rethink/bin/activate

pip install rethinkdb

```

##### Step 2: Run the server

`rethinkdb`

Yes. That’s it for now. You can play with more advanced features later.

It enables an administrative console available through HTTP on `localhost:8080`

##### Step 3: Load your data.

Throw your JSON data at RethinkDB instance by running:

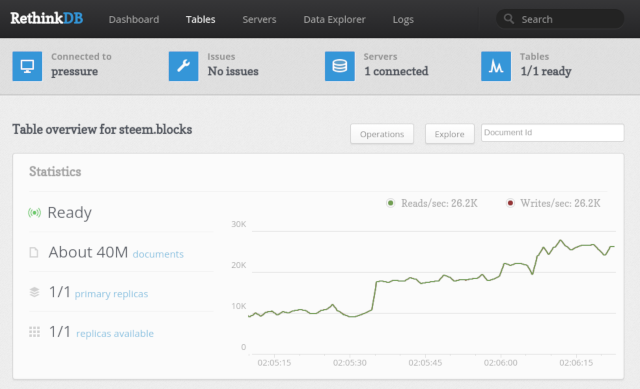

`rethinkdb import -f blockstream.json --table steem.blocks`

Where `blockstream.json` is your big 46GB file with data from 40M blocks.

It goes to a table called `blocks` within a database called `steem`.

```

[========================================] 100%

40000000 rows imported to 1 table in 3615.68 secs

Done (3615 seconds)

```

Not bad, on my hardware it consumes json stream a little bit faster than what a 100Mbps link could provide.

`rethinkdb_data` takes 66GB

<center>

</center>

##### Step 5: Make use of the data

You can use the Data Explorer feature from the administrative console, or use Python, Javascript or Ruby to get the data you are looking for.

If you want to learn more look at the [RethinkDB documentation](https://rethinkdb.com/docs)

Depending on your needs, you can create and use secondary indexes to speed up your queries.

#### Or...

Use a different set of tools and solutions that meet your needs.

A less exotic solution is to use MySQL that supports a native JSON data type, which combined with generated columns might be a good alternative.

(I’m going to try that too.)

# Previous episodes of Steem Pressure series

<center>https://www.youtube.com/watch?v=YRwHrVVU2NA</center>

[Introducing: Steem Pressure #1](/@gtg/introducing-steem-pressure-1)

[Steem Pressure #2 - Toys for Boys and Girls](/@gtg/steem-pressure-2-toys-for-boys-and-girls)

[Steem Pressure #3 - Steem Node 101](/@gtg/steem-pressure-3-steem-node-101)

[Steem Pressure: The Movie ;-)](/@gtg/steem-pressure-the-movie)

[Steem Pressure #4 - Need for Speed](/@gtg/steem-pressure-4-need-for-speed)

[Steem Pressure #5 - Run, Block, Run!](/@gtg/steem-pressure-5-run-block-run)

[Steem Pressure #6 - MIRA: YMMV, RTFM, TLDR: LGTM](/@gtg/steem-pressure-6-mira-ymmv-rtfm-tldr-lgtm)

[Steem Pressure #7 - Go Fork Yourself!](/@gtg/steem-pressure-7-go-fork-yourself-step-by-step-guide-to-building-and-setting-up-a-mira-powered-hf21-ready-steem-consensus-node)

Stay tuned for next episodes of Steem Pressure :-)

---

<sup>

If you believe I can be of value to Steem, please vote for me ([**gtg**](/@gtg)) as a witness.

Use [Steemit's Witnesses List](https://steemitwallet.com/~witnesses) or [SteemConnect](https://steemconnect.com/sign/account-witness-vote?witness=gtg&approve=1)

Or if you trust my choice you can set [**gtg**](/@gtg) as a proxy that will vote for witnesses for you.

***Your vote does matter!***

You can contact me directly on [steem.chat](https://steem.chat), as [Gandalf](https://steem.chat/direct/gandalf)

</sup>

<center>

https://steemitimages.com/DQmSheuDfCHizk1xvHPcrFjQNKfBzgun9UXDxdEp6JJCum9/steem_wide.png

[Steem On](/)

</center>👍 moromaro, gmlgang, bitsharesorg, cryptogeorge21, sho-t, bpcvoter, arfa07, joythewanderer, etherpunk, herbacianymag, zimnaherbata, ew-and-patterns, flygoing, lemouth, revisesociology, omstavan, themarkymark, buildawhale, upmyvote, shadowspub, jrcornel, gerber, daan, exyle, bubke, accelerator, cadawg, bestboom, dlike, triptolemus, bobby.madagascar, permaculturedude, steem.leo, leo.voter, zaku-pal, leo.syndication, techken, blockbrothers, bengy, investprosper, earlmonk, steemindian, fsm-liquid, triplea.bot, zaku-leo, maxuva, maxuvd, dirapa, laissez-faire, bilpcoinpower, lloyddavis, msg768, jacekw, leveragetrading, slobberchops, eii, anmitsu, bingbabe, dismayedworld, sm-skynet, alexusbrave, rozku, coinmarketcal, mangos, alexsandr, minersean, mahdiyari, tubcat, hijosdelhombre, debtfreein2, sbi4, decentral, cryptocurator, kinakomochi, highonthehog, tr777, yoshiko, fukako, afrog, wajahatsardar, steemit-jp, sarmaticus, variola, lupafilotaxia, thevil, smon-fan, sm-jewel, tr77, smonbear, steemitbae, sumomo, alaiza, fujisan, yusaku, jp-tiger, sarmagames, kerkyra, meesterboom, backinblackdevil, roelandp, sazbird, hanshotfirst, b00m, smoner, smonian, jjangjjanggirl, techslut, skye1, skylinebuds, jphamer1, bpcvoter1, didic, michealb, arcange, jamesbrown, notdavemate, realestatecoach, justyy, moneybaby, rafalski, holger80, tarazkp, babytarazkp, raphaelle, cwow2, raymonjohnstone, inertia, freddio.sport, gtg, blocktrades, liondani, tomatom, anomalogy, bilpcoinrecords, reghunter, gandalf, bpcvoter2, akmalz, bocik, acidyo, rahul.stan, steeminator3000, mister-meeseeks, bilpcoinbpc, britcoins, kimchi-king, bloom, cryptoninja.guru, wwwfernand, bookkeeping, abh12345, jayna, getup, japasep, theycallmedan, briggsy, argentsilver, marcon, myslnarodowa, narodowcy.net, wisbeech, dinamo, fiveboringgames, bestofreddit, celinavisaez, steemtruth, karencarrens, okkiedot, kevinwong, michelmake, revise.leo, jeffjagoe, sportschain, jagoe, kicchudop1434, morph, squidben1, logic, bpcvoter3, jamzed, senku, titoncp, aoecoin, venturecapitalvc, conceptmachine, apasia.tech, open-asset, khunfarang, tetrahedron96, tamito0201, katrina-ariel, nacoche, borran, thrasher666, bugavi, abojasim880, massivevibration, htann, motadori, berlinmoonlight, nakedtrader, jvpengine, lifetoday, asforex, drsensor, danotanot, bonzopoe, tartanium, fortivitia, slimak, alebeta, terrara, vezo, roboton, crokkon, muskari, troziut, jeff-kubitz, jkkim, doremifa, ola-haukland, apalent, lorexpler, ellaaa, nivididia, bargolis, wilfredn, imperfect-one, tarachan99, seanlloyd, sallyfun, polipolina, galorum, daynewright, ebryans, garethnelsonuk, dadawada, rsmartt777, pshiploo, kork75, dlapalabita, afiqsejuk, astromaniak, tom1291, never-giveup, anthonyadavisii, blewitt, dianation, mrstaf, khunpoom, wishmaiden, drakos, teamashen, steembet.asia, r0g1, travoved, cardboard, profitcheck, lordneroo, shannonsalter334, asgarth, boykeren, kenny-crane, yehey, alcik, tnflp, treasure-chest, enforcer48, georgeboya, goumao, wf9877, detlev, fisnikk, janusface, sqljoker, seckorama, derangedvisions, faitherz33, zaxan-blog, marlians.ccc, marlians.photo, derangedcontests, deranged.coin, atanas007, vndragon, rarediamond, kabolo, tudors, jexus77, eveuncovered, peak.moderator, eprolific, blakemag, el-deportista02, witnesspage, trioboyz, favcau, britvr, dimissa, judasp, georgecri80, bravedevelopment, nurainiwahab, jungch98, cloris, leesujin, igl00, cazing, timcliff, mini-v, and2300, v4vapid, jkramer, lyon89, m31, yoogyart, good-karma, adialam, azirgraff, machroezar, dodolzk, yayan, ekafao, tongchat27, mini-zephalexia, zisan012, teenovision, harvhat, shogo, chesatochi, pedrohmc23, schlafhacking, arrit, joele, arenatoken, lirdin, elgeko, aceh1001art, sigizzang, netuoso, paulnulty, zaerif, rofilm, jocieprosza, schattenjaeger, zacknorman97, astil.codex, steempeak, marcusantoniu26, mycoyote, steemzzang, smooth, jerge, watchlist, drax, kharma.scribbles, steem.newz, bhagoley, kiwiscanfly, project007.dblog, promo.bot, for91days, sanjeevm, greekgodx, kubak, suci, geekgirl, bewarecenterbase, selfvotejustice, accountsale, scc664, senorita01, cinefilm, wackou, spikykevin, pkocjan, cheer-up, hjmarseille, elmundodexao, borislavzlatanov, dylanhobalart, dimon14, benicents, meandmyfam, faissalchahid, steemonboarder, markkujantunen, vampire-steem, alexei83, witcher73, pawelc, disguarpe, kuberman, qazwsx, steevc, edb, mildredcat, luangelxd, konti, s3rg3, sarakey, okean123, avisk, jennyzer, ace108, wongbraling, bernardr34, techcoderx, mariela53, sugarbun, preparedwombat, ewi5000, jokersword, steemchiller, oguzhangazi, coinmeria, livinguktaiwan, masirwin, daudimitch, craigahamilton, cbdoilstore, upvotenev, geoffrey, benitrade, yuriks2000, anderson69, urdreamscometrue, thebluepanda, emrebeyler, knifer, bigmoneyman, wira8788, lenmar, sniper555, mys, whd, steem-hikers, steem-hikers-pl, beleg, archetypal, ctime, kacperski, sportbot, bluesniper, hankreirden, sultan-aceh, kaeserotor, justynatina, shai-2, masterthematrix, rashidmunir, jeanpi1908, j-p-bs, yarak, gulf41, rocksg, foxkoit, kotturinn, jakiro12, steem-ambassador, starkerz, tj4real, milagros, leotrap, dokter-purnama, khusairi, herryazmi11, steemit-uruguay, exhibition, tinyhousecryptos, sacrosanct, sireh, yohan2on, littledisciples, busy.pay, bait002, meemee, shaotech, tiababi, sekhet, sonder-an, santoninoatocha, bflanagin, jamesbattler, anarcotech, leynedayana, kesolink, johnathan69, yogaworld, nairadaddy, raymondbruce, kingsolo, kilianparadise, steemitcanarias, megaraz, drayling, cauac, ddrfr33k, melvin7, azaaaboys, dwiesiekiery, gogo777, joselo92, transisto, likwid, fourfourfun, promobot, paul3080, pakganern, piotrgrafik, bobinson, angelaraquel, juliiana, dotwin1981, gamer0815, kaldewei, upvote100, grecki-bazar-ewy, pl-kuchnia, blablasteem, oaldamster, edrienaraylugo, chrisda, vf427, russianalcoholic, taoyuanming, hansgans, votebetting, upfundme, ufm.pay, ufm.reserve, tmps, t50, tm50, ufmbot, edzuxxx, rfrancy, soyunasantacruz, tonystarkalive, aaronpake, tafsilot, pnc, pastzam, areebafatima, iamujjwaladitya, andrewma, hilarymedina, saifalizaif, thelogicaldude, nkilicoglu, nongvo.piy, moredecisive, mahirbd, mansi94, sharif075, ozannn, miguellopez, jixue, seminaxyz, anilk571, mahabub1995, afza, ruble123, pboulet, blockchainstudio, leancenter, adiakula, titan-c, izhar92, vcdragon, lowkeyperson, hitless, yorvygarcia, kaptainkrayola, suzn.poudel, k0stil, jjg, gradzio, moebiusdroste, taxi.ninja, yoginiofoz, mammasitta, risha15, jingdol, yefersonx, coastline17, lord-geraldi, adelepazani, francescomai, kevinkutta, stefano.massari, hendrixixi, phremind, bettman, steamsteem, stuffbyspencer, cesinfenianos, iyushthakur, institutdpos, lorieee, kingsmind, bayron, donald.porter, speedracer, zakia, sn0n, steemit.usd, albertowhite, connor-russo, pasle, kheroh, puffi, wirago, oo7harv, emmaisimmaso, angel33, jumman, dobartim, bue, paragism, sayemrahman, madeyanell, finanzwolf, practicaleric, mattmcguire, steemistifier, juank16, pneumaluna82, alexisemmanuel, steemflagrewards, pixel-anais, sandra16, whatsup, steemfeed, freebornsociety, adsup, dyrt88, gpcx86, kurzer84, kazi799, deepresearch, solominer, helpiecake, eonwarped, eonwarp, dopal, jalentakesphotos, crimsonclad, clayboyn, r0nd0n, bigdizzle91, blarchive, wesphilbin, helpie, redrica, jlsplatts, kernelillo, mountainjewel, zipporah, big-m, veckinon, bembelmaniac, musicvoter, kbr, shookriya, kantos, lillywilton, zainenn, derosnec, paulag, guchtere, infamousit, meno, angelro, socent, pechichemena, luisferchav, soulturtle, hazem91, spawnband, doomsdaychassis, lilacse, javicuesta, free-reign, frejafri, ashikstd, a0i, winifredhiggs, princessamber, ghostdylan, isiahwalt, twanz, mxgstudios, suriel3000, musicvoter2, discordiant, bluefinstudios, markaustin, followbtcnews, smalltall, macchiata, slacktmusic, lightcaptured, foxyspirit, citimillz, trevorpetrie, raoufwilly, camuel, cryptofuwealth, androshchuk, anarcho-andrei, bennettitalia, warpedpoetic, ggd3yydze, yomismosoy, kafupraise, evecab, esthersanchez, supsnehal, orlandogonzalez, healer2678, hayalet, haylike, firesteem, abusamad, jasuly, srikandi, topoisomerase, bukfast, jaki01, kobold-djawa, davidorcamuriel, endracsho, icuz, lintang, steem-queen, junioro, waybeyondpadthai, kate-hudson, mgszamanvlog, reiseamateur, andablackwidow, kanchana, daree23, cambalache, yosra.yusefi, yewhos, guest4test4,